New on-device plugins for UE5 from NVIDIA announced at Unreal Fest 2024

What's better than official support from NVIDIA itself to make life easier for Unreal Engine 5 developers?

At Unreal Fest 2024, Team Green announced new on-device plugins that streamline the integration of AI-powered MetaHuman characters on Windows PCs through NVIDIA ACE.

For those who want a head start on the latest NVIDIA ACE innovations, there is a sample project you can try out to learn the phases of integrating ACE into games and applications.

The on-device ACE plugins include Audio2Face-3D for lip sync and facial animation, the Nemotron Mini 4B Instruct model for response generation, and retrieval-augmented generation (RAG) for contextual responses.

Developers can build databases for intellectual property, generate low-latency responses, and synchronize them with MetaHuman facial animations in Unreal Engine 5. These microservices are optimized to run efficiently on Windows PCs with low latency and minimal memory usage.
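To make the RAG idea concrete, here is a minimal Python sketch of the retrieval step only. It is not the ACE plugin API: the lore snippets, the function names, and the simple word-count similarity are all assumptions made for illustration, and a real setup would use an embedding model and pass the prompt to a language model such as Nemotron Mini.

```python
import math
import re
from collections import Counter

# Hypothetical lore snippets standing in for a game's knowledge base.
LORE = [
    "The blacksmith Mara forges swords in the village of Eldham.",
    "The river Wyn floods every spring and blocks the northern road.",
    "Captain Orlo guards the harbor gate at night.",
]

def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        docs,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, docs):
    """Prepend the retrieved context to the player's line."""
    context = "\n".join(retrieve(query, docs))
    return f"Context:\n{context}\n\nPlayer: {query}\nCharacter:"

if __name__ == "__main__":
    print(build_prompt("Who guards the harbor?", LORE))
```

The prompt built this way carries the relevant piece of lore, so the character's generated reply can stay consistent with the game world before it is handed off for facial animation.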

The new plugins will be available soon, but you can get a head start by reading this.

Autodesk Maya is also getting a new Audio2Face-3D plugin for AI-driven, audio-based facial animation. The plugin features an intuitive, streamlined interface that makes creating avatars in Maya quicker and more efficient.

Additionally, it includes source code, enabling developers to tailor the plugin for their preferred digital content creation (DCC) tools.

Lastly, the latest Unreal Engine 5 renderer microservice in NVIDIA ACE is now in early access, with support for the NVIDIA Animation Graph microservice and Linux.

Animation Graph is a microservice that integrates with other AI models to create a conversational pipeline for characters, handling RAG architectures and maintaining both context and dialogue history.

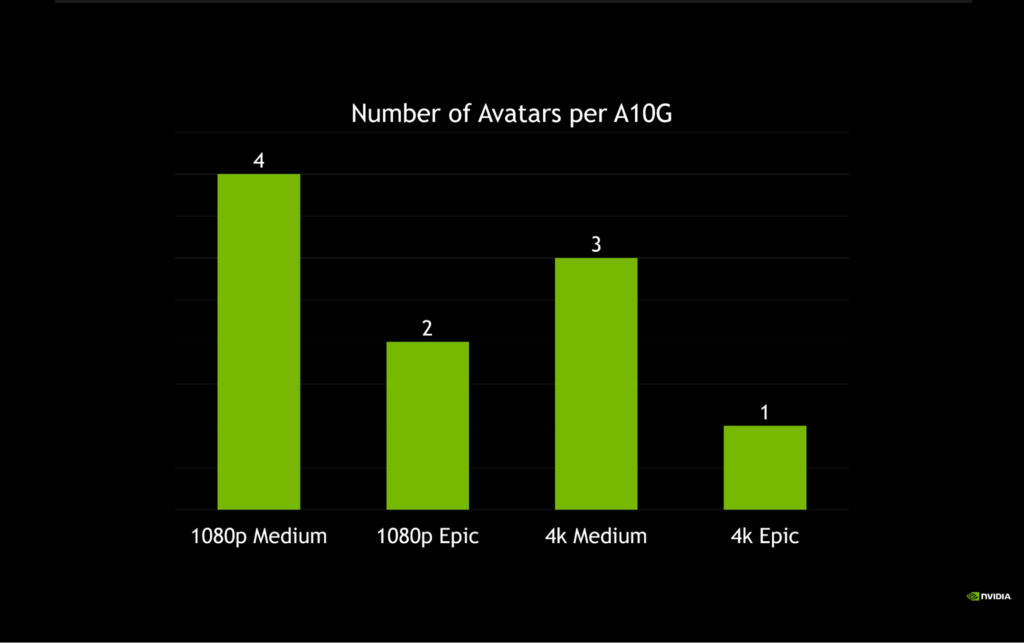

With UE5 pixel streaming support, developers can run MetaHuman characters on cloud servers and stream rendered frames and audio to any browser or edge device using Web Real-Time Communication (WebRTC).

To begin working with the Unreal Engine 5 renderer microservice, apply for early access, then download the NIM microservice package.