AMD @ Advancing AI 2024: 5th Gen EPYC, Instinct MI325X, and more

AMD has concluded its Advancing AI 2024 keynote, launching a range of new data center and business-ready hardware to tackle the growing demands of AI and enterprise computing.

First, we have the new 5th Gen EPYC processors, formerly known as "Turin" when they were first publicly disclosed at COMPUTEX 2024.

This Zen 5-based family of chips is a monstrous powerhouse, delivering more than 2.7x the performance of competitors, led by the best-in-class flagship EPYC 9965 packing a whopping 192 cores and 384 threads.

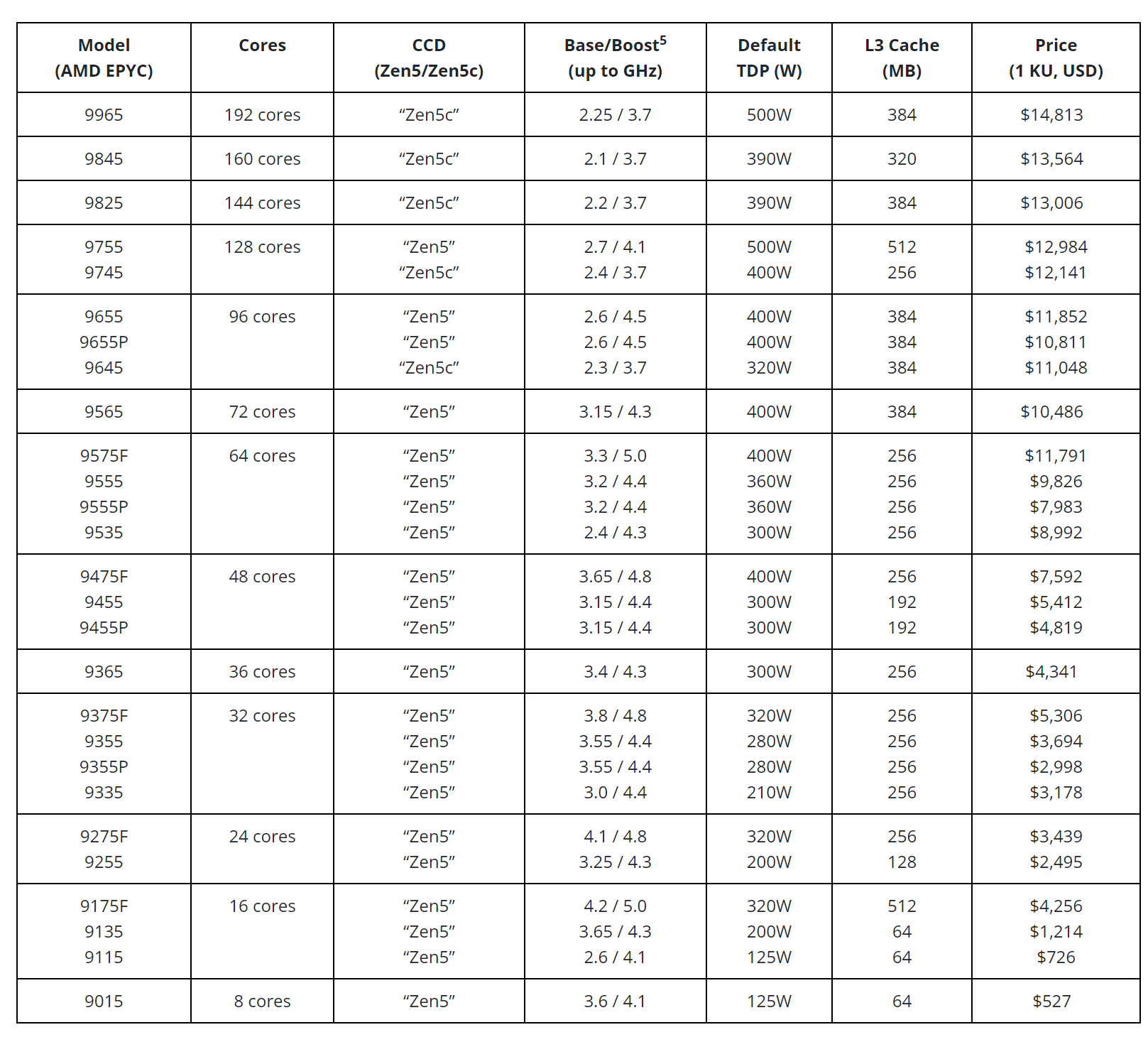

AMD will also be offering a wide range of SKUs with different core counts, boost clocks, L3 cache capacities, and more to get everyone onto the Team Red train. Heck, the most "affordable" one, which comes with only 8 cores, will be pretty appealing to those with lower processing requirements who still want pro-class feature sets.

The platform will also offer 12-channel DDR5 memory support per CPU with speeds of up to DDR5-6400, in addition to AVX-512 support with a full 512-bit data path.
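As a rough back-of-the-envelope check of what that memory configuration means, the sketch below estimates the theoretical peak bandwidth per socket. It assumes the standard 64-bit (8-byte) data width per DDR5 channel, which is not stated in AMD's announcement, and the function name is purely illustrative.

```python
def peak_ddr5_bandwidth_gbs(channels: int,
                            mega_transfers_per_s: int,
                            bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal units).

    bytes_per_transfer=8 is an assumption: a 64-bit data bus per channel.
    """
    # MT/s * bytes per transfer gives MB/s per channel; divide by 1000 for GB/s.
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


# 12 channels of DDR5-6400, as quoted for the 5th Gen EPYC platform
socket_bw = peak_ddr5_bandwidth_gbs(channels=12, mega_transfers_per_s=6400)
print(f"{socket_bw:.1f} GB/s per socket")
```

This is a theoretical ceiling only; sustained bandwidth in real workloads will be lower.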

It is built to power through all sorts of modern data center tasks, with up to 17% better Instructions Per Clock (IPC) performance for enterprise and cloud workloads and up to 37% higher IPC for AI and HPC workloads compared to the previous generation.

With the EPYC 9965 processor, customers can expect:

- Up to 4x faster video transcoding.

- Up to 3.9x quicker scientific insights for HPC applications.

- 1.6x better performance per core for virtualized infrastructure.

For AI-driven applications, the EPYC 9965 offers up to 3.7x the performance of competitor processors in end-to-end AI workloads. For small to medium generative AI models, such as Meta’s Llama 3.1-8B, the EPYC 9965 provides nearly double the throughput.

The AI-focused EPYC 9575F, with its boost frequency of up to 5GHz, accelerates large-scale AI clusters, delivering up to 700,000 more inference tokens per second.

Here's the current lineup of the 5th Gen EPYC processors alongside their reference prices.

Next, we have the long-awaited Instinct MI325X accelerator, built on the CDNA 3 architecture, which enables customers and partners to train and develop optimized AI solutions at the system, rack, and data center levels.

The 256GB of HBM3E with 6.0TB/s of throughput is a great start as it trounces the current competition, the NVIDIA H200, with 1.8x more capacity and 1.3x more bandwidth, as well as 1.3x greater peak FP16 and FP8 compute performance. This results in stronger inference performance over the H200: a 1.3x improvement on Mistral 7B at FP16, 1.2x on Llama 3.1 70B at FP8, and 1.4x on Mixtral 8x7B at FP16.
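The capacity and bandwidth multipliers can be sanity-checked with simple division. The H200 figures used below (141GB of HBM3E at 4.8TB/s) are NVIDIA's published specifications rather than numbers from this article, so treat them as assumptions.

```python
# MI325X figures as quoted in the article
MI325X_CAPACITY_GB = 256
MI325X_BANDWIDTH_TBS = 6.0

# Assumed H200 figures (NVIDIA's published specs, not from this article)
H200_CAPACITY_GB = 141
H200_BANDWIDTH_TBS = 4.8

capacity_ratio = MI325X_CAPACITY_GB / H200_CAPACITY_GB
bandwidth_ratio = MI325X_BANDWIDTH_TBS / H200_BANDWIDTH_TBS

print(f"Capacity:  {capacity_ratio:.2f}x")
print(f"Bandwidth: {bandwidth_ratio:.2f}x")
```

Under these assumptions the ratios land at roughly 1.8x for capacity and 1.25x for bandwidth, in line with the rounded 1.8x and 1.3x figures AMD quotes.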

It is expected to be available beginning in Q4 of this year, with system availability from platform providers like Dell Technologies, Hewlett Packard Enterprise, Gigabyte, Lenovo, and others starting in Q1 2025.

AMD also previewed the upcoming Instinct MI350 lineup powered by the CDNA 4 architecture. It promises a giant leap in performance of up to 35x, comes armed with up to 288GB of HBM3E memory, and is set for release in the second half of 2025.

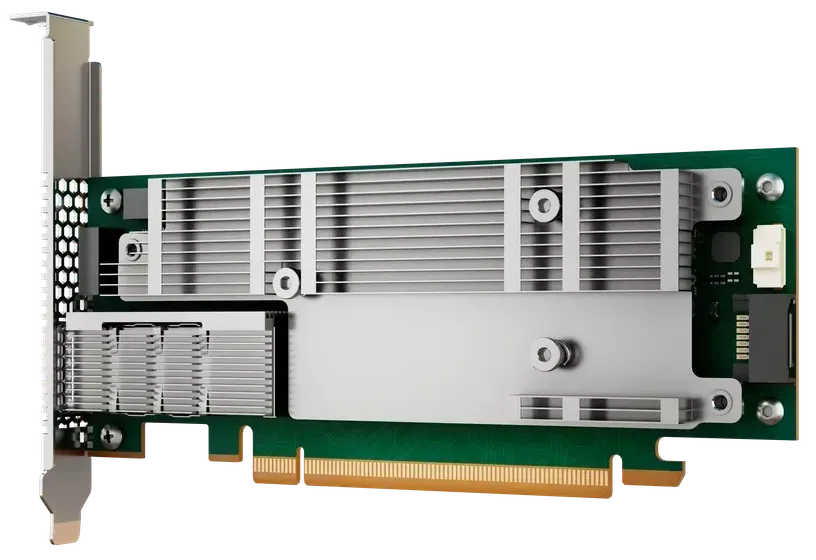

Moving on to the DPU department, we have the new Pensando Salina DPU, now in its 3rd generation. It offers 2x the performance, bandwidth, and scale of the previous generation, supporting 400G throughput for rapid data transfer and enabling enhanced security and scalability for data-driven AI applications.

Meanwhile, the back-end network welcomes the Pensando Pollara 400 NIC, which is UEC-ready and powered by the P4 programmable engine, aiming for leadership in performance, scalability, and efficiency for accelerator-to-accelerator communication.

These programmable DPUs for hyperscalers will be made available in Q4 this year with general availability in the first half of 2025.

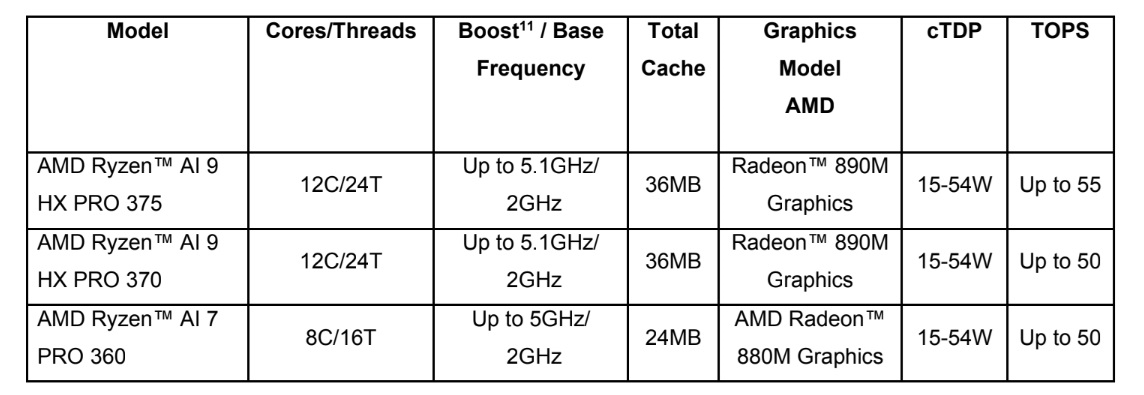

For the business folks, we finally have the PRO versions of the Ryzen AI 300 series processors: two 9-tier chips and one 7-tier chip will be powering business-ready laptops beginning later this year.

In terms of specs, they are more or less identical to the consumer-facing non-PRO chips, so there are no performance discrepancies here. The existing PRO-exclusive security features like Secure Processor, Shadow Stack, and Platform Secure Boot are here to stay, while new ones are being added, including Cloud Bare Metal Recovery for seamless system restore via the cloud as well as AMD Device Identity tailored towards supply chain protection.

Watch Dog Timer is now better than ever at providing additional detection and recovery processes, while AI-based malware detection backed by the immense NPU capabilities within the Ryzen AI 300 series processors is now available via PRO Technologies with select ISV partners.

On the software side, AMD ROCm is getting something new as well via the version 6.2 update, which brings in the FP8 datatype, Flash Attention 3, and Kernel Fusion, enabling performance uplifts of up to 2.4x in inference and 1.8x in LLM training.

The ecosystem supports widely used frameworks like PyTorch, Triton, Hugging Face, and generative AI models such as Stable Diffusion 3 and Meta Llama 3.1, ensuring broad out-of-the-box compatibility with its Instinct accelerators.